CodecFlow

The execution layer for AI Operators and Robotics on @Solana

CA:69LjZUUzxj3Cb3Fxeo1X4QpYEQTboApkhXTysPpbpump

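VLAs are still very new, and many people find it hard to understand how VLAs differ from LLMs.

Here is a deep dive into how these AI systems differ in reasoning, perception, and action. Part 1.

Let's break down the key differences, including how AI agents built around an LLM differ from operator agents that use VLA models: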

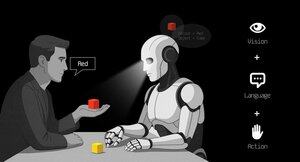

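1. Perception: how they sense the world

Agent (LLM): processes text or structured data such as JSON and API responses, and sometimes images. Think of a brain working on clean, abstract input, like reading a manual or parsing a spreadsheet. It suits structured environments but is limited to the data it is fed.

Operator (VLA): takes in raw, real-time pixels from cameras, plus sensor data (e.g., touch, position) and proprioception (awareness of its own movement). Like navigating the world with eyes and senses, it adapts to dynamic, messy environments such as user interfaces or physical spaces.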
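
To make the contrast concrete, here is a minimal Python sketch of the two kinds of input. The class and field names (`AgentInput`, `OperatorObservation`, `joint_positions`, and so on) are hypothetical and do not come from any particular framework. A real operator would receive camera frames as arrays rather than nested lists.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class AgentInput:
    """Hypothetical LLM-agent input: text plus already-structured data."""
    text: str
    structured: dict[str, Any] = field(default_factory=dict)  # e.g. a parsed JSON API response


@dataclass
class OperatorObservation:
    """Hypothetical VLA-operator input for a single control step."""
    pixels: list[list[tuple[int, int, int]]]  # H x W grid of RGB values from a camera frame
    touch: list[float]                          # tactile sensor readings
    joint_positions: list[float]                # proprioception: the body's own joint angles
    timestamp_s: float                          # frames arrive as a continuous, time-ordered stream

    @property
    def image_shape(self) -> tuple[int, int]:
        """Return (height, width) of the camera frame."""
        height = len(self.pixels)
        width = len(self.pixels[0]) if self.pixels else 0
        return height, width


# An agent gets a clean request; an operator gets a raw frame plus body state.
agent_in = AgentInput(text="Book a flight to Tokyo", structured={"date": "2025-09-01"})
obs = OperatorObservation(
    pixels=[[(0, 0, 0)] * 4 for _ in range(3)],  # a tiny 3 x 4 black frame
    touch=[0.0, 0.2],
    joint_positions=[0.1, -0.4, 1.2],
    timestamp_s=0.033,
)
print(obs.image_shape)  # (3, 4)
```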

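2. Action: how they interact

Agent: acts by calling functions, tools, or APIs. Picture a manager sending precise instructions, such as "book a flight via the Expedia API." This is deliberate, but it depends on pre-built tools and clean interfaces.

Operator: executes continuous, low-level actions such as moving the mouse cursor, typing, or controlling robot joints. Like a skilled worker manipulating the environment directly, it suits tasks that require real-time precision.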
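
Here is a minimal sketch of the two action styles. It assumes a hypothetical `book_flight` tool, which is not the real Expedia API, and a cursor controller that moves at most `max_step` pixels per tick (an assumed parameter). The agent issues one discrete, named call. The operator emits a small continuous command on every tick.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class ToolCall:
    """Agent-style action: one discrete, named call with arguments."""
    name: str
    args: dict[str, Any]


def book_flight(destination: str) -> str:
    # Hypothetical stand-in for a real booking API.
    return f"booked:{destination}"


# Registry of tools the agent is allowed to call.
TOOLS: dict[str, Callable[..., Any]] = {"book_flight": book_flight}


def execute_tool_call(call: ToolCall) -> Any:
    return TOOLS[call.name](**call.args)


@dataclass
class MouseDelta:
    """Operator-style action: a small continuous command issued every tick."""
    dx: float  # horizontal movement in pixels for this tick
    dy: float  # vertical movement in pixels for this tick


def move_cursor(position: tuple[float, float], target: tuple[float, float],
                max_step: float = 5.0) -> MouseDelta:
    """Step toward the target, moving at most max_step pixels per axis per tick."""
    dx = max(-max_step, min(max_step, target[0] - position[0]))
    dy = max(-max_step, min(max_step, target[1] - position[1]))
    return MouseDelta(dx, dy)


result = execute_tool_call(ToolCall("book_flight", {"destination": "NRT"}))
step = move_cursor((0.0, 0.0), (12.0, -3.0))
print(result)  # booked:NRT
print(step)    # MouseDelta(dx=5.0, dy=-3.0)
```

The agent's call either succeeds or fails as a whole. The operator only gets partway to the target on this tick and would issue another `MouseDelta` on the next one.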

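3. Control: how they make decisions

Agent: follows a slow, reflective loop: plan, call a tool, evaluate the result, repeat. It is token-bound (limited by text processing) and network-bound (waiting on API responses). This makes it methodical but slow for real-time tasks.

Operator: makes step-by-step decisions in a tight feedback loop. Think of a gamer reacting instantly to what is on screen. This speed enables fluid interaction, but it demands strong real-time processing.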
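
The two control styles can be sketched as loops. This is a toy illustration under stated assumptions. `plan` stands in for an LLM call and `tools` for API calls. `operator_loop` uses a simple proportional correction (the `gain` value is an assumption) to show a tight observe-act cycle on a one-dimensional position.

```python
from typing import Callable


def agent_loop(goal: int,
               plan: Callable[[int, int], str],
               tools: dict[str, Callable[[int], int]],
               max_iters: int = 10) -> tuple[int, int]:
    """Slow reflective loop: plan -> call a tool -> evaluate -> repeat.

    In a real agent, each plan() is an LLM call (token-bound) and each
    tool call waits on an API (network-bound).
    """
    state, iters = 0, 0
    while state != goal and iters < max_iters:  # evaluate: are we done yet?
        tool_name = plan(state, goal)           # plan: choose a tool
        state = tools[tool_name](state)         # act: call the tool
        iters += 1
    return state, iters


def operator_loop(target: float, position: float,
                  gain: float = 0.5, steps: int = 20) -> float:
    """Tight feedback loop: observe the error and correct a little every tick."""
    for _ in range(steps):
        error = target - position   # observe
        position += gain * error    # act immediately on what was observed
    return position


def add10(state: int) -> int:
    return state + 10


def add1(state: int) -> int:
    return state + 1


def simple_plan(state: int, goal: int) -> str:
    # Hypothetical planner: take big steps while far away, small steps when close.
    return "add10" if goal - state >= 10 else "add1"


final_state, iterations = agent_loop(23, simple_plan, {"add10": add10, "add1": add1})
position = operator_loop(target=1.0, position=0.0)
print(final_state, iterations)  # 23 5
```

The agent needs five full plan/act rounds. In practice, each round would cost an LLM call and an API round trip. The operator simply keeps nudging its position on every tick.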

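4. Training data: what drives their learning

Agent: trained on large text corpora, instructions, documentation, or RAG (retrieval-augmented generation) datasets. It learns from books, code, or FAQs and excels at reasoning over structured knowledge.

Operator: learns from demonstrations (e.g., videos of humans performing tasks), teleoperation logs, or reward signals. Like learning by watching and practicing, this suits tasks where explicit instructions are scarce.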
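
A common way to learn from demonstrations is behavior cloning: fit a policy that maps observations to the actions a human took. The code below is a toy, one-dimensional sketch. Real VLAs train large neural networks on images and action traces. Here, `action = w * observation + b` is fitted by ordinary least squares to hypothetical teleoperation steering demos.

```python
def behavior_clone(demos: list[tuple[float, float]]) -> tuple[float, float]:
    """Fit action = w * observation + b to (observation, action) pairs by least squares."""
    n = len(demos)
    mean_obs = sum(obs for obs, _ in demos) / n
    mean_act = sum(act for _, act in demos) / n
    cov = sum((obs - mean_obs) * (act - mean_act) for obs, act in demos)
    var = sum((obs - mean_obs) ** 2 for obs, _ in demos)
    if var == 0:
        raise ValueError("demonstrations need at least two distinct observations")
    w = cov / var
    b = mean_act - w * mean_obs
    return w, b


# Hypothetical demos: observation = lateral offset from the lane center,
# action = steering command a human teleoperator gave in that situation.
demos = [(-2.0, 1.6), (-1.0, 0.8), (0.0, 0.0), (1.0, -0.8), (2.0, -1.6)]
w, b = behavior_clone(demos)
predicted = w * 0.5 + b  # the cloned policy's action for an unseen offset of 0.5
print(round(w, 3), round(predicted, 3))  # -0.8 -0.4
```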

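5. Failure modes: where they break

Agent: prone to hallucination (making up answers) and brittle long-horizon plans that collapse when a single step fails. Like a strategist who overthinks or misreads the situation.

Operator: faces covariate shift (when real-world conditions differ from the training data) and compounding errors in control (small mistakes that build up over time). Like a driver losing control on an unfamiliar road.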
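
A toy one-dimensional simulation shows why small control errors compound. Assume every action carries the same small error, `per_step_error` (a made-up value of 0.01). Without feedback, the error grows linearly with the horizon (100 steps × 0.01 = 1.0). That can push the system outside the range of states seen in training, which is covariate shift. With proportional feedback, the error settles near `per_step_error / gain` = 0.02. `TRAINING_RANGE` is a hypothetical stand-in for the demonstration data.

```python
TRAINING_RANGE = (-0.5, 0.5)  # hypothetical range of states covered by the demonstrations


def rollout(steps: int, per_step_error: float, feedback: bool, gain: float = 0.5) -> float:
    """Try to hold position 0.0 while every action carries a small systematic error."""
    position = 0.0
    for _ in range(steps):
        correction = -gain * position if feedback else 0.0  # feedback: react to observed drift
        position += correction + per_step_error              # the error is added on every step
    return position


def out_of_distribution(state: float) -> bool:
    low, high = TRAINING_RANGE
    return not (low <= state <= high)


open_loop = rollout(steps=100, per_step_error=0.01, feedback=False)
closed_loop = rollout(steps=100, per_step_error=0.01, feedback=True)
print(round(open_loop, 3), out_of_distribution(open_loop))      # 1.0 True
print(round(closed_loop, 3), out_of_distribution(closed_loop))  # 0.02 False
```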

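6. Infrastructure: the tech behind them

Agent: relies on a prompt/router to decide which tools to call, a tool registry of available functions, and memory/RAG for context. It is a modular setup, like a command center coordinating tasks.

Operator: needs video ingestion pipelines, action servers for real-time control, safety guards to prevent harmful actions, and replay buffers to store experience. It is a high-performance system built for dynamic environments.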
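
A few of the components named above can be sketched in plain Python. This is an illustrative assumption, not any specific framework's API. On the agent side, it shows a tool registry. On the operator side, it shows a safety guard (here, simple clamping to a joint limit) and a fixed-capacity replay buffer.

```python
import random
from collections import deque
from typing import Any, Callable


class ToolRegistry:
    """Agent side: the functions a prompt/router is allowed to call."""

    def __init__(self) -> None:
        self._tools: dict[str, Callable[..., Any]] = {}

    def register(self, name: str, fn: Callable[..., Any]) -> None:
        self._tools[name] = fn

    def call(self, name: str, **kwargs: Any) -> Any:
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        return self._tools[name](**kwargs)


def safety_guard(action: list[float], limit: float) -> list[float]:
    """Operator side: clamp each joint command to [-limit, limit] before it reaches hardware."""
    return [max(-limit, min(limit, a)) for a in action]


class ReplayBuffer:
    """Operator side: fixed-capacity store of (observation, action, reward) transitions."""

    def __init__(self, capacity: int) -> None:
        self._buffer: deque = deque(maxlen=capacity)  # oldest transitions drop out automatically

    def add(self, observation: Any, action: Any, reward: float) -> None:
        self._buffer.append((observation, action, reward))

    def sample(self, k: int) -> list:
        return random.sample(list(self._buffer), k)  # uniform sample without replacement

    def __len__(self) -> int:
        return len(self._buffer)


registry = ToolRegistry()
registry.register("summarize", lambda text: text[:9])  # hypothetical "summarizer"
safe = safety_guard([0.2, 3.0, -5.0], limit=1.0)
buffer = ReplayBuffer(capacity=3)
for t in range(5):
    buffer.add(observation=t, action=t, reward=0.0)
print(registry.call("summarize", text="quarterly report"), safe, len(buffer))
```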

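7. Where each shines: their sweet spots

Agent: dominates workflows with clean APIs (e.g., automating business processes), reasoning over documents (e.g., summarizing reports), and code generation. It is the go-to choice for structured, high-level tasks.

Operator: excels in messy environments without APIs, such as navigating clunky user interfaces, controlling robots, or handling game-like tasks. When a task involves real-time interaction with unpredictable systems, VLAs are king.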

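8. Mental model: planner + executor

Think of the LLM agent as the planner: it breaks complex tasks down into clear, logical goals.

The VLA operator is the executor: it carries out those goals by interacting directly with pixels or physical systems. A checker (another system or agent) monitors the results to ensure success.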
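
The planner/executor/checker split can be sketched as a small orchestration function. All names here are hypothetical. `planner` plays the LLM agent, `executor` plays the VLA operator, and `checker` plays the verifying system. The retry budget `max_retries` is an assumption.

```python
from typing import Callable


def run_task(task: str,
             planner: Callable[[str], list[str]],
             executor: Callable[[str], bool],
             checker: Callable[[str, bool], bool],
             max_retries: int = 2) -> dict[str, bool]:
    """Planner splits the task into goals, the executor attempts each goal,
    and the checker verifies the outcome; failed goals are retried a bounded number of times."""
    results: dict[str, bool] = {}
    for goal in planner(task):
        ok = False
        for _ in range(max_retries + 1):
            ok = checker(goal, executor(goal))
            if ok:
                break
        results[goal] = ok
    return results


def simple_planner(task: str) -> list[str]:
    # Hypothetical fixed plan; an LLM agent would generate this from the task text.
    return ["open app", "fill form", "submit"]


attempts: dict[str, int] = {}


def flaky_executor(goal: str) -> bool:
    # Hypothetical operator that fails "fill form" on its first attempt only.
    attempts[goal] = attempts.get(goal, 0) + 1
    return not (goal == "fill form" and attempts[goal] == 1)


def trusting_checker(goal: str, reported_ok: bool) -> bool:
    return reported_ok


outcome = run_task("file an expense report", simple_planner, flaky_executor, trusting_checker)
print(outcome)  # {'open app': True, 'fill form': True, 'submit': True}
print(attempts["fill form"])  # 2
```

Here the checker simply trusts the executor's report. A real checker would inspect the screen or sensor state independently.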

$CODEC

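Codecflow Optr offers a unified way to build agents that can observe, reason, and act in both digital and physical environments. Whether the job is automating desktop workflows, controlling robot arms, or testing in simulation, it uses the same mental model and primitives.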

Louround 🥂 August 21, 2025

Dips in a bull market are meant to be bought, especially on projects with big catalysts

We all know that AI is the narrative of this cycle, started by ai16z and Virtuals last year.

My bet is that the market will focus on more complex and sophisticated technologies such as VLAs, and let me tell you why.

LLMs (Large Language Models) mainly read and write text: they're great at explaining, planning, and generating instructions, but they don't by themselves control motors or interact with the physical world (as you may have experienced with ChatGPT).

VLAs (Vision-Language-Action models) differ from LLMs in that they are multimodal systems that look at things (vision), understand instructions (language), and directly produce actions. It's like telling a robot to pick up a red cup and having it move its arm to do so.

VLAs are trained on examples that pair images/video + instructions + real action traces (how a robot actually moved), and they must run fast and safely in real time. LLMs, on the other hand, are trained on huge text collections and focus on reasoning and language tasks.

TL;DR: LLMs think and speak, while VLAs see, reason, and act.

As you can see, VLAs are a major addition to LLMs and will notably enable the next 0-to-1 innovation in the broader economy: robotics. A majority of investment funds are allocating a large share of their capital to this sector, which they see as the next logical evolution of the AI industry.

I already made a post a while ago on the current leader in the crypto market, @codecopenflow, which did not raise capital (fair launch) yet is shipping cutting-edge products and currently sitting at $23M FDV.

For comparison, other crypto competitors have raised $20M (@openmind_agi) at what is probably a $200M to $300M+ FDV, even though no product or community has been built and shipped yet.

What makes Codec a leading project in the sector is that it tackles a crucial bottleneck in robotics and AI: the difficulty of getting all the different AI tools to interact with each other. Let me explain.

Their latest release, OPTR (operator), is a toolkit that helps build operators capable of interacting on multiple platforms such as robots, desktops, browsers, or simulations. The objective of an operator is to see, reason, and act (VLA) in both digital (computers) and physical (robots) worlds.

This toolkit serves as core infrastructure for robotic teams aiming to test their product and enhance the overall process by providing a unified experience instead of separate ones for web browsers, simulations, or robots. This essentially makes the operator adaptive and autonomous regardless of its environment.
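
The idea of one skill driving many environments comes down to a shared interface. The sketch below illustrates that design pattern only. It is not OPTR's actual API, and `Environment`, `BrowserEnv`, `RobotSimEnv`, and `run_skill` are invented names.

```python
from abc import ABC, abstractmethod


class Environment(ABC):
    """Hypothetical shared interface every environment adapter implements."""

    @abstractmethod
    def observe(self) -> dict:
        ...

    @abstractmethod
    def act(self, action: str) -> None:
        ...


class BrowserEnv(Environment):
    def __init__(self) -> None:
        self.url = "about:blank"

    def observe(self) -> dict:
        return {"state": self.url}

    def act(self, action: str) -> None:
        self.url = action  # in a browser, an action here means navigating to a URL


class RobotSimEnv(Environment):
    def __init__(self) -> None:
        self.gripper = "open"

    def observe(self) -> dict:
        return {"state": self.gripper}

    def act(self, action: str) -> None:
        self.gripper = action  # in the simulator, an action here sets the gripper state


def run_skill(env: Environment, actions: list[str]) -> dict:
    """The same skill code drives any environment that implements the interface."""
    for action in actions:
        env.act(action)
    return env.observe()


print(run_skill(BrowserEnv(), ["https://example.com"]))  # {'state': 'https://example.com'}
print(run_skill(RobotSimEnv(), ["closed"]))              # {'state': 'closed'}
```

The skill code depends only on `observe` and `act`. Moving from a browser to a simulator therefore means writing a new adapter, not rewriting the skill.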

You get the idea: it will save a lot of time for companies and developers who previously had to go through each step manually, and where you save time, you save money.

It will also enable Codec to build its own operator projects and bring new capabilities to market relatively quickly, notably through its marketplace.

TL;DR: You have probably seen videos of robots folding tissues, sorting boxes, or jumping onto various objects. Each has been trained for one very specific use case, and unfortunately, a skill learned in one environment cannot be reused in another the way a human's can. Codec's OPTR addresses this by making skills transferable across environments and situations, which makes training and development much faster and cheaper for enterprises.

This is why Codec is so interesting in unifying the digital world with the physical world.

$CODEC, Coded.
